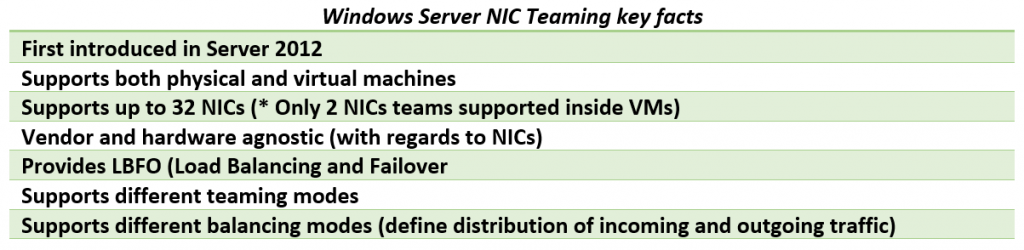

NIC teaming is not something new in Windows Server 2016, but I find it interesting to review this functionality as it exists in the current iteration of Windows Server, touching, as usual, on the basics and history of the feature.

The NIC teaming feature reached maturity in Server 2012 R2, and there are (almost) no major changes in this department in Server 2016. Still, if you are just starting to use NIC teaming in practice or are preparing for a related Microsoft exam, you may find it useful to review this feature thoroughly.

NIC teaming, also known as LBFO (Load Balancing/Failover), was first introduced as an out-of-box (OOB) feature in Windows Server 2012, with the intent of adding simple, affordable traffic reliability and load balancing for server workloads. First-hand explanations of the pros of this feature by Don Stanwyck, who was the program manager for NIC teaming back then, can be found here. But, long story short, this feature gained special importance with the arrival of virtualization and high-density workloads: given how many services can run inside multiple VMs on a single Hyper-V host, you simply cannot afford a connectivity loss that impacts all of them. This is why we got NIC teaming: to safeguard against a range of failures, from an accidental network cable disconnect to NIC or switch failures. The same workload density also pushes for increased bandwidth.

In a nutshell, Windows Server NIC teaming provides hardware-independent bandwidth aggregation and transparent failover suitable for both physical and virtualized servers. Before Server 2012 you had to rely on hardware/driver-based NIC teaming, which obliged you to buy NICs from the same vendor and rely on third-party software to make this magic happen. This approach had some major shortcomings: you could not mix NICs from different vendors in a team, each vendor had its own approach to managing teams, and such implementations also lacked remote management features. Basically, it was not simple enough. Once Windows Server NIC teaming was introduced, hardware-specific teaming solutions fell out of common use, as it no longer made sense for hardware vendors to reinvent the wheel by offering functionality that is better handled by a built-in Windows feature which supports any WHQL-certified Ethernet NIC.

Like I said earlier, Windows Server 2016 has not introduced any major changes to NIC teaming, except for Switch Embedded Teaming, which is positioned as the future way of teaming in Windows. Switch Embedded Teaming (SET) is an alternative NIC teaming solution that you can use in environments that include Hyper-V and the Software Defined Networking (SDN) stack in Windows Server 2016. SET integrates some NIC teaming functionality into the Hyper-V Virtual Switch.

On the LBFO teaming side, Server 2016 added the ability to switch the LACP timer between slow and fast, which may be important for those who use Cisco switches (there seems to be an update that allows you to do the same in Server 2012 R2).
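To put the slow/fast distinction into numbers: IEEE 802.1AX (formerly 802.3ad) defines a fast periodic time of 1 second and a slow periodic time of 30 seconds, and a partner is timed out after three missed LACPDUs. The short Python sketch below only does that arithmetic; the function name `lacp_timeout` is hypothetical. In Server 2016 the setting itself is, as far as I know, exposed as the `-LacpTimer` parameter (`Fast` or `Slow`) of `Set-NetLbfoTeam`.

```python
# LACP timer values per IEEE 802.1AX: how often LACPDUs are sent, and
# how long a silent partner survives (three missed LACPDUs) before timeout.
PERIODIC_SECONDS = {"Fast": 1, "Slow": 30}
MISSED_LACPDUS_BEFORE_TIMEOUT = 3


def lacp_timeout(timer: str) -> int:
    """Return the seconds before a silent LACP partner is declared down."""
    return PERIODIC_SECONDS[timer] * MISSED_LACPDUS_BEFORE_TIMEOUT


for timer in ("Slow", "Fast"):
    print(f"{timer}: LACPDU every {PERIODIC_SECONDS[timer]} s, "
          f"timeout after {lacp_timeout(timer)} s")
# Slow: LACPDU every 30 s, timeout after 90 s
# Fast: LACPDU every 1 s, timeout after 3 s
```

In other words, the fast timer lets a failed link be detected in about 3 seconds instead of about 90, which is why it matters when the switch side expects fast LACP.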

The gains and benefits of Windows Server NIC teaming are fairly obvious, but what can give you a hard time (especially if you are preparing for Microsoft exams) is trying to wrap your head around all the possible NIC teaming modes and their combinations. So let’s see if we can sort out these modes, all of which can be configured both via the GUI and via PowerShell.

Let’s start with teaming modes, as choosing one is the first decision to make when configuring NIC teaming. There are three teaming modes to choose from: Switch Independent and two Switch Dependent modes, LACP and Static.

Switch Independent mode means we don’t need to configure anything on the switch: everything is handled by Windows Server, and you can even attach your NICs to different switches. In this mode all outbound traffic is load balanced across the physical NICs in the team (exactly how depends on the load balancing mode), but incoming traffic won’t be load balanced by the switch, because the switches are unaware of the NIC team. This is an important distinction which sometimes takes a while to spot, especially if you frame your question to Google as something like “LACP vs switch independent performance”.

Switch dependent modes require configuring the switch to make it aware of the NIC team, and the NICs have to be connected to the same switch. Once again, quoting the official documentation: “Switch dependent teaming requires that all team members are connected to the same physical switch or a multi-chassis switch that shares a switch ID among the multiple chassis.”

There are plenty of switches that let you try this at home. For example, I have the quite affordable yet good D-Link DGS-1100-18 from the DGS-1100 Series of D-Link’s Gigabit Smart Switches. This switch supports 802.3ad Link Aggregation with up to 9 groups per device and 8 ports per group, and costs around 100 EUR at the time of writing.

Static teaming mode requires you to configure individual switch ports and connect cables to those specific ports. This is why it is called a static configuration: ports are statically assigned to the team, and if you connect a cable to a different port, you break your team.

LACP stands for Link Aggregation Control Protocol, and it is essentially the more dynamic version of switch dependent teaming: you enable LACP on the switch instead of statically assigning individual ports to the team. Once the switch is configured, it becomes aware of your NIC team and handles dynamic negotiation of the ports.

In both switch dependent teaming modes, the switch is aware of the NIC team and hence can load balance incoming network traffic.
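The trade-offs between the three teaming modes can be summarized as a small decision helper. This is a simplified sketch: the mode names mirror the PowerShell `-TeamingMode` values (`SwitchIndependent`, `Static`, `Lacp`), while `candidate_modes` and its two yes/no inputs are hypothetical and only encode the rules described in this article (a multi-chassis switch sharing one switch ID counts as a single switch).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TeamingMode:
    name: str                       # value used by New-NetLbfoTeam -TeamingMode
    needs_switch_config: bool       # must the switch be configured for the team?
    allows_multiple_switches: bool  # may members connect to different switches?
    inbound_balanced: bool          # does the switch spread incoming traffic?


MODES = [
    TeamingMode("SwitchIndependent", False, True, False),
    TeamingMode("Static", True, False, True),
    TeamingMode("Lacp", True, False, True),
]


def candidate_modes(can_configure_switch: bool, spans_switches: bool) -> list[str]:
    """Return the teaming modes compatible with the given environment."""
    return [
        m.name
        for m in MODES
        if (can_configure_switch or not m.needs_switch_config)
        and (m.allows_multiple_switches or not spans_switches)
    ]


print(candidate_modes(can_configure_switch=False, spans_switches=False))  # ['SwitchIndependent']
print(candidate_modes(can_configure_switch=True, spans_switches=False))   # ['SwitchIndependent', 'Static', 'Lacp']
print([m.name for m in MODES if m.inbound_balanced])                      # ['Static', 'Lacp']
```

If you cannot touch the switch, or your team members are spread across separate switches, Switch Independent is the only option; inbound load balancing is what you gain by going switch dependent.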

To control how load distribution is handled, we have a range of load balancing modes (you can think of them as “load balancing algorithms”): Address Hash, Hyper-V Port, and Dynamic, which was first introduced in Server 2012 R2.

Address Hash mode uses attributes of network traffic (IP addresses, ports and MAC addresses) to determine which specific NIC the traffic should be sent through.
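As a rough illustration of the idea (not Windows’ actual hash function), the sketch below hashes the 4-tuple of a TCP flow with CRC32 and takes the result modulo the number of team members. The function name `pick_nic`, the addresses and the NIC names are hypothetical. Note the consequence: every packet of a given flow lands on the same NIC, so a single flow never gets more bandwidth than one team member provides; the gain comes from many flows spreading across the team.

```python
import zlib


def pick_nic(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
             nics: list[str]) -> str:
    """Map a flow to one team member by hashing its 4-tuple.

    CRC32 is an illustrative stand-in, not the hash Windows uses.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return nics[zlib.crc32(key) % len(nics)]


team = ["NIC1", "NIC2"]

# Different client ports are different flows and may land on different NICs.
for client_port in range(50000, 50006):
    print(client_port, "->", pick_nic("10.0.0.5", "10.0.0.20", client_port, 443, team))

# The same flow always hashes to the same member.
first = pick_nic("10.0.0.5", "10.0.0.20", 50000, 443, team)
assert first == pick_nic("10.0.0.5", "10.0.0.20", 50000, 443, team)
```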

Hyper-V Port load balancing ties each VM to a specific NIC in the team. This may work well for a Hyper-V host with numerous VMs, as their traffic gets distributed across multiple NICs in the team, but if you have just a few VMs it may not be your best choice, as it leads to underutilization of the NICs within the team.
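The underutilization problem is easy to see in a toy model. The sketch below pins each VM’s virtual switch port to one team member using simple round-robin; round-robin and the `assign_vm_ports` name are assumptions for illustration, not a description of how Windows actually picks the member.

```python
def assign_vm_ports(vms: list[str], nics: list[str]) -> dict[str, str]:
    """Pin each VM's switch port to one team member, round-robin (simplified)."""
    return {vm: nics[i % len(nics)] for i, vm in enumerate(vms)}


team = ["NIC1", "NIC2", "NIC3", "NIC4"]

# Two VMs on a four-NIC team: half of the team never carries their traffic.
few = assign_vm_ports(["web01", "sql01"], team)
idle = [nic for nic in team if nic not in few.values()]
print(few)   # {'web01': 'NIC1', 'sql01': 'NIC2'}
print(idle)  # ['NIC3', 'NIC4']

# Eight VMs: every member ends up serving two VMs.
many = assign_vm_ports([f"vm{i}" for i in range(8)], team)
print({nic: list(many.values()).count(nic) for nic in team})
# {'NIC1': 2, 'NIC2': 2, 'NIC3': 2, 'NIC4': 2}
```

Because each VM is tied to one member, a single busy VM is also capped at the bandwidth of one NIC, no matter how many NICs the team has.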

Dynamic is the default option, introduced in Server 2012 R2 and recommended by Microsoft. It gives you the advantages of both previously mentioned algorithms: it uses address hashing for outbound traffic and Hyper-V port balancing for inbound traffic, with additional logic built in to rebalance traffic in case of failures or breaks in the traffic flow within the team.
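Microsoft describes the rebalancing part of Dynamic mode in terms of “flowlets”: a flow is split at natural gaps in its traffic, and each new flowlet can be placed on a different team member. The toy model below shows only that idea for outbound traffic, plus moving flows off a failed member. The 0.5 second gap threshold, the least-loaded placement rule (cumulative bytes as “load”) and the `DynamicBalancer` class are all assumptions for illustration, not the real Windows logic.

```python
from dataclasses import dataclass, field

FLOWLET_GAP = 0.5  # hypothetical idle gap (seconds) that ends a flowlet


@dataclass
class DynamicBalancer:
    nics: list[str]
    load: dict[str, int] = field(init=False)                   # bytes sent per healthy member
    last_seen: dict[tuple, float] = field(default_factory=dict)
    current: dict[tuple, str] = field(default_factory=dict)    # flow -> member of its current flowlet

    def __post_init__(self) -> None:
        self.load = {nic: 0 for nic in self.nics}

    def send(self, flow: tuple, timestamp: float, size: int) -> str:
        """Return the team member that carries this outbound packet."""
        gap = timestamp - self.last_seen.get(flow, float("-inf"))
        if gap > FLOWLET_GAP or self.current.get(flow) not in self.load:
            # New flowlet (or its member failed): use the least-loaded healthy NIC.
            self.current[flow] = min(self.load, key=self.load.get)
        nic = self.current[flow]
        self.load[nic] += size
        self.last_seen[flow] = timestamp
        return nic

    def fail(self, nic: str) -> None:
        """Drop a failed member; its flows move at their next packet."""
        self.load.pop(nic, None)


team = DynamicBalancer(["NIC1", "NIC2"])
backup = ("10.0.0.5", "10.0.0.30", 50000, 445)
web = ("10.0.0.5", "10.0.0.20", 50001, 443)
print(team.send(backup, 0.0, 1500))  # NIC1 (both idle, first member wins the tie)
print(team.send(backup, 0.1, 1500))  # NIC1 (same flowlet)
print(team.send(web, 0.1, 1500))     # NIC2 (least loaded)
print(team.send(backup, 2.0, 1500))  # NIC2 (gap > 0.5 s starts a new flowlet)
team.fail("NIC2")
print(team.send(backup, 2.1, 1500))  # NIC1 (flow moved off the failed member)
```

Unlike plain address hashing, a long-lived flow is not stuck on one member forever: each pause gives the team a chance to move it.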

Now that we have covered what you need to know to make informed NIC teaming configuration decisions (or to pass Microsoft exams), let’s have a look at the practical side of configuring this.

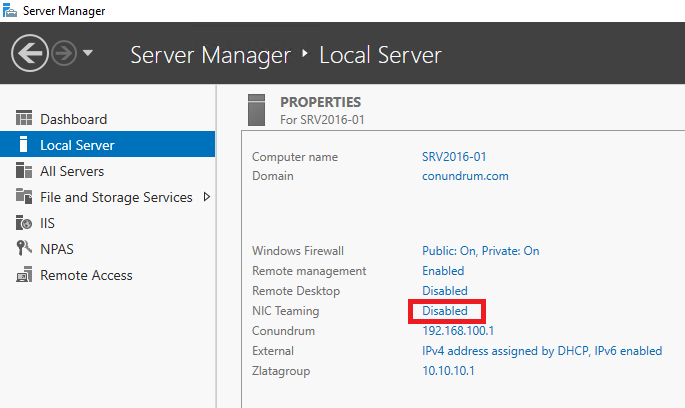

The GUI for managing NIC teams is available through Server Manager: just click the Disabled link next to NIC Teaming to start the configuration process:

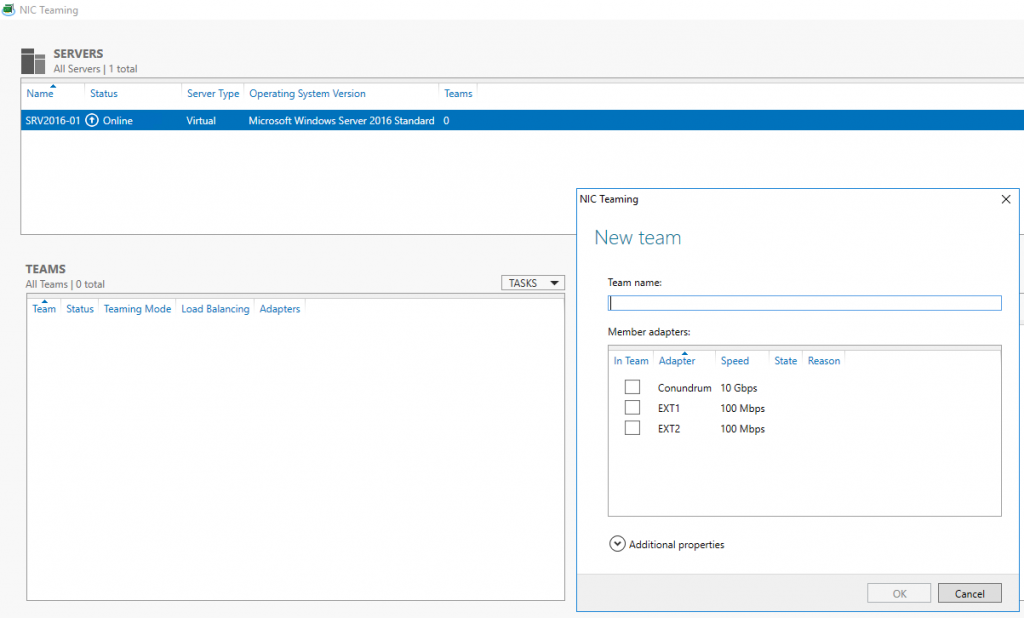

This will get you into the NIC Teaming console, where things are straightforward and easy (provided you have read the first half of this article).

If you are doing this in a VM, you may notice that your choices under Additional properties in the New team dialog are limited to Switch Independent teaming mode and Address Hash load balancing mode, with the first two drop-downs greyed out. This simply means that we have to review what is supported and required when it comes to NIC teams inside VMs. From a supportability point of view, Microsoft supports only two-member NIC teams, and the NICs participating in the team have to be connected to two different external virtual switches (which means different physical adapters). With all these requirements met, a NIC team inside a VM must have its teaming mode configured as Switch Independent and its load balancing mode configured as Address Hash.
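The supportability rules for teams inside a VM are easy to encode as a checklist. The sketch below is a hypothetical pre-flight check (the `GuestTeamPlan` class and `support_problems` function are made up for illustration, and the mode strings follow the labels of the New team dialog) that returns the list of violated rules. It can only verify that members use different external virtual switches, not which physical adapters back those switches.

```python
from dataclasses import dataclass


@dataclass
class GuestTeamPlan:
    members: list[tuple[str, str]]  # (vNIC name, external virtual switch it connects to)
    teaming_mode: str               # as labelled in the New team dialog
    load_balancing: str


def support_problems(plan: GuestTeamPlan) -> list[str]:
    """Return the guest NIC teaming rules this plan violates (empty list = supported)."""
    problems = []
    if len(plan.members) != 2:
        problems.append("a team inside a VM must have exactly two members")
    if len({switch for _, switch in plan.members}) != len(plan.members):
        problems.append("each member must connect to a different external virtual switch")
    if plan.teaming_mode != "Switch Independent":
        problems.append("teaming mode must be Switch Independent")
    if plan.load_balancing != "Address Hash":
        problems.append("load balancing mode must be Address Hash")
    return problems


good = GuestTeamPlan([("vNIC1", "External-A"), ("vNIC2", "External-B")],
                     "Switch Independent", "Address Hash")
bad = GuestTeamPlan([("vNIC1", "External-A"), ("vNIC2", "External-A")],
                    "LACP", "Dynamic")
print(support_problems(good))      # []
print(len(support_problems(bad)))  # 3
```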

Looking at these limitations, it is tempting to say that it is better to configure NIC teaming on the Hyper-V host itself and not use it inside VMs at all. But I bet there are use cases for teaming inside a VM; I just can’t think of one right now (if you can, let me know in the comments to this blog post).

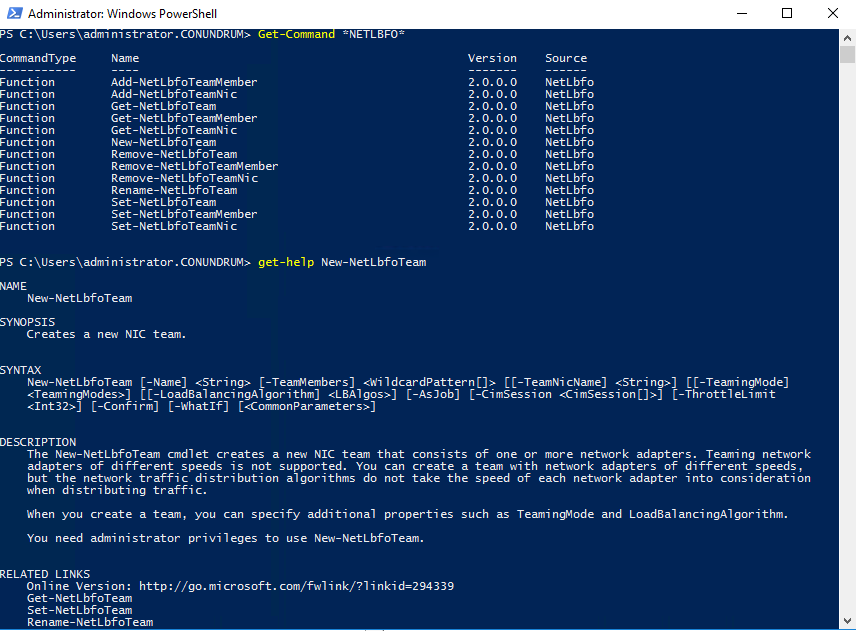

If you want to manage NIC teaming via PowerShell, you can find all the required information in the NetLbfo module documentation on TechNet, or just run `Get-Command *NETLbfo*` and then use `Get-Help` as necessary.
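To connect the GUI choices to PowerShell, the sketch below maps the labels from the New team dialog to the parameter values of `New-NetLbfoTeam` and builds the command line you would then run in an elevated PowerShell session on the server. The mapping reflects my understanding that the GUI’s Address Hash corresponds to `TransportPorts`; the helper `new_team_command` and the adapter names “Ethernet” and “Ethernet 2” are hypothetical, so check them against `Get-NetAdapter` on your machine.

```python
# GUI label -> New-NetLbfoTeam parameter value
TEAMING_MODES = {"Switch Independent": "SwitchIndependent",
                 "Static Teaming": "Static",
                 "LACP": "Lacp"}
LOAD_BALANCING = {"Address Hash": "TransportPorts",
                  "Hyper-V Port": "HyperVPort",
                  "Dynamic": "Dynamic"}


def new_team_command(name: str, members: list[str],
                     teaming_mode: str, load_balancing: str) -> str:
    """Build a New-NetLbfoTeam command line from GUI-style choices."""
    members_arg = ",".join(f'"{m}"' for m in members)
    return (f'New-NetLbfoTeam -Name "{name}" -TeamMembers {members_arg} '
            f"-TeamingMode {TEAMING_MODES[teaming_mode]} "
            f"-LoadBalancingAlgorithm {LOAD_BALANCING[load_balancing]}")


print(new_team_command("Team1", ["Ethernet", "Ethernet 2"], "Switch Independent", "Dynamic"))
# New-NetLbfoTeam -Name "Team1" -TeamMembers "Ethernet","Ethernet 2" -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic
```

Afterwards, `Get-NetLbfoTeam` lists the existing teams so you can confirm the result.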

I think this blog post provided enough information to get you started with NIC teaming especially if you have not read anything on it just yet.

Got a project that needs expert IT support?

From Linux and Microsoft Server to VMware, networking, and more, our team at CR Tech is here to help.

Get personalized support today and ensure your systems are running at peak performance or make sure that your project turns out to be a successful one!
